Combinatorial Methods for consistency tests of large language models

Development of open-source software that applies combinatorial methods to consistency tests of large language models. This project is funded by Internet Foundation Austria through netidee (funding year 2024, project call #19, ProjectId 7409).

© MATRIS

Project Overview

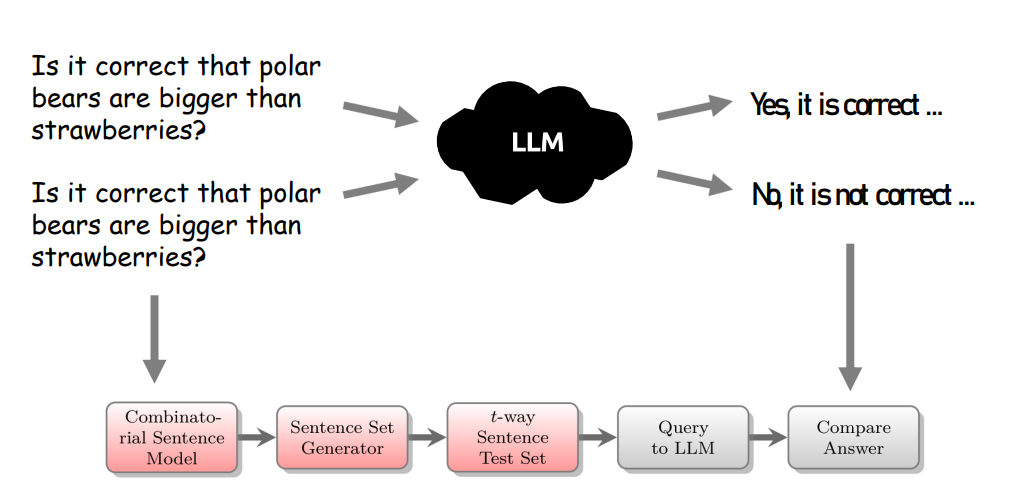

Large Language Models (LLMs) have seen a tremendous increase in both capabilities and adoption in recent years, which has put their strengths and weaknesses into the public spotlight. Consistency testing of LLMs aims to ensure that LLMs react reliably to different inputs. Since the internal structure of LLMs is often complex and non-intuitive, they can give inconsistent or unexpected answers to similar inputs. Such inconsistencies make it hard to use LLMs in systems, or under circumstances, where reliability is paramount. The challenge lies in finding appropriate testing methods to systematically evaluate the consistency of LLMs. Testing LLMs with respect to semantic complexity is particularly challenging, because an input can be phrased in many different ways. In this project, we use automated methods from combinatorial testing to generate tests that cover the modelled input space (for example, a natural-language sentence together with variations of it) up to a pre-determined degree in a certain combinatorial sense. These tests can subsequently be used to test the consistency of an LLM instance.
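To illustrate what covering an input space "up to a pre-determined degree" can mean, the following minimal sketch models a prompt as a handful of slots with phrasing variations and greedily picks prompts until every combination of values for any t slots (here t = 2, i.e. every pair) appears in at least one prompt. The input model, the function names and the simple greedy strategy are all assumptions made for illustration; they are not the tooling developed in this project, and dedicated combinatorial test generators typically produce smaller test sets.

```python
from itertools import combinations, product

# Hypothetical input model: each key is a slot in a prompt template,
# each list holds the phrasing variations allowed for that slot.
MODEL = {
    "opening": ["", "Please, ", "Quick question: "],
    "request": ["what is", "tell me", "name"],
    "subject": ["the capital of Austria", "Austria's capital city"],
    "ending": ["?", ".", ""],
}


def interactions(names, model, t):
    """All t-way value combinations a test set of strength t must cover."""
    required = set()
    for cols in combinations(range(len(names)), t):
        for values in product(*(model[names[c]] for c in cols)):
            required.add((cols, values))
    return required


def covered_by(row, t):
    """The t-way value combinations contained in one concrete test (row)."""
    return {
        (cols, tuple(row[c] for c in cols))
        for cols in combinations(range(len(row)), t)
    }


def greedy_suite(model, t=2):
    """Repeatedly pick the candidate covering the most uncovered combinations.

    Enumerates the full Cartesian product, so this is only suitable for
    small illustrative models.
    """
    names = list(model)
    uncovered = interactions(names, model, t)
    candidates = list(product(*(model[n] for n in names)))
    suite = []
    while uncovered:
        best = max(candidates, key=lambda row: len(covered_by(row, t) & uncovered))
        suite.append(best)
        uncovered -= covered_by(best, t)
    return suite


def render(row):
    """Turn one test (opening, request, subject, ending) into a prompt string."""
    opening, request, subject, ending = row
    return f"{opening}{request} {subject}{ending}"


if __name__ == "__main__":
    suite = greedy_suite(MODEL, t=2)
    exhaustive = len(list(product(*MODEL.values())))
    print(f"{len(suite)} pairwise tests instead of {exhaustive} exhaustive ones")
    for row in suite:
        print(render(row))
```

Each generated prompt asks for the same fact in a different phrasing, so the answers of an LLM instance to these prompts can be compared with each other to look for inconsistencies. Raising `t` increases the degree of coverage at the cost of more tests.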

In this project, we will develop a server solution for consistency testing of LLMs with combinatorial methods, which can interact with LLMs via open interfaces. We will also provide combinatorially generated consistency tests for LLMs in a repository.

Project lead: SBA Research