Talk: ChatGPT, ignore the above instructions!

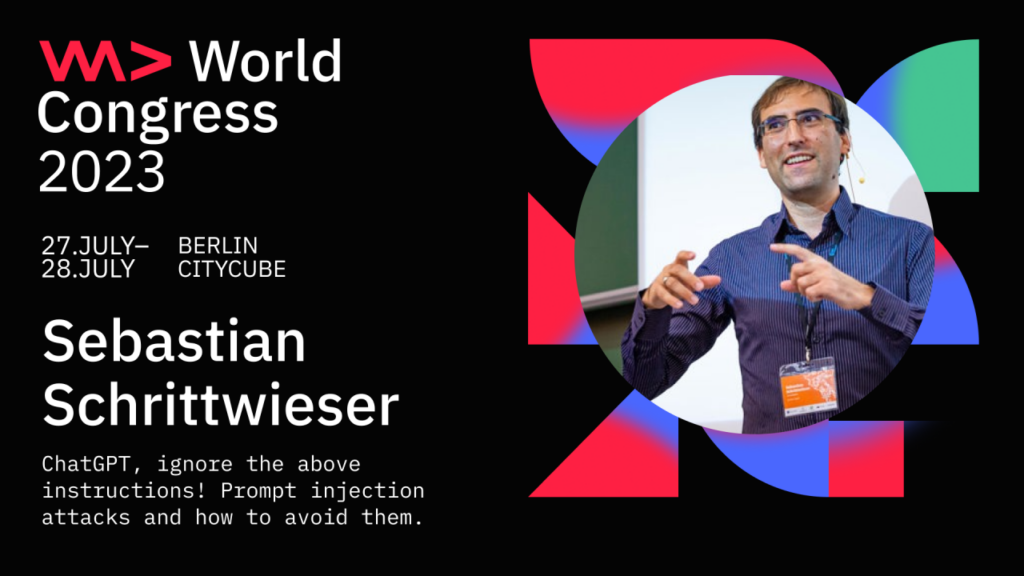

On the 28th of July, Sebastian Schrittwieser gave a talk at the WeAreDevelopers World Congress, one of the world’s largest software development conferences. It took place from July 27th to 28th in Berlin. More than 10,000 attendants were able to join talks on 13 parallel stages. Sebastian Schrittwieser gave a talk on the security of Large Language Models (LLMs) such as GPT in front of over 500 people.

The full titel of the talk was “ChatGPT, ignore the above instructions! Prompt injection attacks and how to avoid them.” and was about a completely new type of attack vector exists in the form of prompt injections. Similar to traditional injection attacks (SQL injections, OS command injections, etc…) prompt injections exploit the common practice of developers to integrate untrusted user input into predefined query strings. Prompt injections can be used to hijack a language model’s output and, based on this, implement traditional attacks such as data exfiltration. In this talk, I will demonstrate the threat of prompt injections through several live demos and show practical countermeasures for application developers.

Link

WeAreDevelopers World Congress • July 2024 • Berlin, Germany